Hi Stephane,

Thanks for your advice.

> In a forced choice like this, the fact that one alternative is free (am I right in assuming that?) will really impact your cost coefficient.

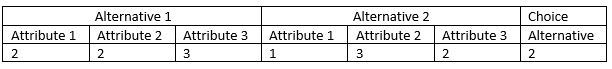

In the forced choice sets, respondents have to choose one of three hypothetical alternatives. None of these corresponds to the current product, and the design has no alternative-specific constants (i.e. alternative 1 is not always a specific type of product, such as “train” rather than “car”). Thus, model 1 (the forced choice model) has one coefficient fewer than model 2 (the free choice model), which contains an alternative-specific constant for the current product. I could also omit this constant in model 2, since I can describe the respondents' current product with my attributes and levels. However, I suspect that doing so would ignore the status quo effect.
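A minimal base-R sketch with made-up utilities (not from my data, and not Apollo code) of why constants behave differently in the two tasks: in an MNL only utility differences matter, so a constant added to all three unlabelled alternatives changes nothing, whereas a constant on the current product alone shifts the SDR probabilities. `mnl_prob`, `V_cbc`, `V_sdr` and `asc_sq` are hypothetical names.

```r
# Multinomial logit probabilities for one choice set: P_j = exp(V_j) / sum_k exp(V_k)
mnl_prob <- function(V) {
  expV <- exp(V - max(V))  # subtract the max for numerical stability
  expV / sum(expV)
}

# Hypothetical utilities of three unlabelled CBC alternatives (generic coefficients only)
V_cbc <- c(alt1 = 0.4, alt2 = -0.2, alt3 = 0.1)

# Only differences matter: a constant common to all alternatives cannot be identified
p_base    <- mnl_prob(V_cbc)
p_shifted <- mnl_prob(V_cbc + 2)
print(all.equal(p_base, p_shifted))

# SDR task: new product vs. current product with identical attribute utility.
# A constant on the current product only (hypothetical value) shifts the shares,
# which is how it can absorb a status quo effect.
V_sdr  <- c(new = 0.3, current = 0.3)
asc_sq <- 0.5
p_no_asc   <- mnl_prob(V_sdr)
p_with_asc <- mnl_prob(V_sdr + c(0, asc_sq))
print(round(p_no_asc, 3))
print(round(p_with_asc, 3))
```

With equal attribute utilities the SDR shares are 50/50; the status quo constant alone moves the current product's share to `plogis(0.5)`.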

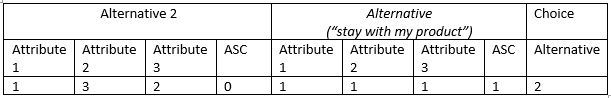

Furthermore, model 2 has only two alternatives: respondents chose between the hypothetical new product they had selected in model 1 and their current product. In other words, participants were simply asked whether they would switch to their preferred alternative (yes/no). The number of observations is the same in both models, i.e. 12 choice sets per participant.
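One way the stacked data for such a joint model could look, sketched in base R under assumptions: one row per choice task, each CBC task followed by its SDR question, and a `ModelType` column that each component filters on via `rows = (ModelType=="CBC")` or `rows = (ModelType=="SDR")`. The respondent count and the `task` column are hypothetical.

```r
# Hypothetical stacked layout: one row per task, CBC and SDR rows interleaved
n_resp  <- 3    # toy number of respondents (assumption)
n_tasks <- 12   # 12 choice sets per participant, as in the survey

database <- data.frame(
  ID        = rep(seq_len(n_resp), each = 2 * n_tasks),
  task      = rep(rep(seq_len(n_tasks), each = 2), times = n_resp),
  ModelType = rep(c("CBC", "SDR"), times = n_resp * n_tasks)
)

# Each component only sees its own rows; both have the same number of observations
table(database$ModelType)
```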

If I understand correctly, in joint estimation the two sub-models do not need to contain the same variables; they may differ in some. What matters is that the error terms of both sub-models have an expected value of zero, which is why it is important to include the alternative-specific constant in model 2, so that it captures the status quo effect.
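The joint estimation idea can be sketched in base R with toy data (all values hypothetical, and this is not how Apollo implements it internally): both components share the attribute coefficients `beta`, the CBC utilities are multiplied by a relative scale `mu_cbc`, the status quo constant `asc_sq` exists only in the SDR component, and the joint log-likelihood is the sum of the component log-likelihoods, i.e. the log of the product of their probabilities.

```r
# Log-probability of the chosen alternative in an MNL
log_mnl <- function(V, chosen) {
  V[chosen] - log(sum(exp(V)))
}

# Joint log-likelihood with shared beta, CBC scale mu_cbc and an SDR-only constant
joint_ll <- function(beta, mu_cbc, asc_sq, cbc, sdr) {
  ll_cbc <- sum(vapply(seq_along(cbc$X), function(i) {
    log_mnl(mu_cbc * drop(cbc$X[[i]] %*% beta), cbc$choice[i])
  }, numeric(1)))
  ll_sdr <- sum(vapply(seq_along(sdr$X), function(i) {
    V <- drop(sdr$X[[i]] %*% beta) + c(0, asc_sq)  # alt 2 = current product
    log_mnl(V, sdr$choice[i])
  }, numeric(1)))
  ll_cbc + ll_sdr  # product of probabilities = sum of log-probabilities
}

# Toy data: two attributes (a dummy and price); each X is alternatives x attributes
cbc <- list(
  X = list(matrix(c(1, 0, 0, 20, 30, 25), nrow = 3),
           matrix(c(0, 1, 1, 25, 20, 30), nrow = 3)),
  choice = c(1, 2)
)
sdr <- list(
  X = list(matrix(c(1, 0, 20, 22), nrow = 2),
           matrix(c(1, 0, 20, 22), nrow = 2)),
  choice = c(2, 1)
)
beta <- c(0.8, -0.05)
joint_ll(beta, mu_cbc = 0.85, asc_sq = 0.5, cbc = cbc, sdr = sdr)
```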

I followed Apollo example 22 and estimated the joint model, both in preference space and in WTP space, using Bayesian estimation. SDR stands for "Separated Dual Response" (model 2), and CBC is model 1.

```r
apollo_fixed = c("b_asc_2", "mu_SDR")

# [...]

# Distributions in apollo_HB$hbDist ("N" = normal, "F" = fixed)
b_asc_1 = "N",
b_asc_2 = "F",
mu_CBC  = "F",
mu_SDR  = "F",

# [...]

hIW              = TRUE,
priorVariance    = 1,
degreesOfFreedom = 30,

# [...]

# Define settings for the CBC (forced choice) MNL component
mnl_settings = list(
  alternatives = c(alt1=1, alt2=2, alt3=3),
  avail        = list(alt1=1, alt2=1, alt3=1),
  choiceVar    = Choice,
  V            = lapply(V, "*", mu_CBC),  # scale all CBC utilities by mu_CBC
  rows         = (ModelType=="CBC")
)

# Compute probabilities using MNL model
P[['CBC']] = apollo_mnl(mnl_settings, functionality)

# [...]

# Define settings for the SDR (new product vs. current product) MNL component
mnl_settings = list(
  alternatives = c(alt1=1, alt2=2),
  avail        = list(alt1=1, alt2=1),
  choiceVar    = Choice,
  V            = lapply(V, "*", mu_SDR),  # mu_SDR is fixed to 1 (normalisation)
  rows         = (ModelType=="SDR")
)

# Compute probabilities using MNL model
P[['SDR']] = apollo_mnl(mnl_settings, functionality)

### Combined model
P = apollo_combineModels(P, apollo_inputs, functionality)
```
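What `lapply(V, "*", mu_CBC)` does can be checked with a small base-R sketch using hypothetical utilities: multiplying every utility by a scale below 1 flattens the choice probabilities, while ratios of coefficients (such as a WTP ratio b_k / b_Price) are unaffected because the scale cancels. The coefficient values are made up.

```r
mnl_prob <- function(V) exp(V - max(V)) / sum(exp(V - max(V)))

V <- list(alt1 = 0.6, alt2 = 0.1, alt3 = -0.3)  # hypothetical utilities
mu_CBC <- 0.85

p_unscaled <- mnl_prob(unlist(V))
p_scaled   <- mnl_prob(unlist(lapply(V, "*", mu_CBC)))  # same call as in the model
round(rbind(p_unscaled, p_scaled), 3)

# The scale multiplies numerator and denominator alike, so ratios are unchanged
b_Att1Lvl2 <- 0.9; b_Price <- -0.04  # hypothetical coefficients
all.equal(b_Att1Lvl2 / b_Price, (mu_CBC * b_Att1Lvl2) / (mu_CBC * b_Price))
```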

In both preference space and WTP space, mu_CBC is below 1, at about 0.85.
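For reference, under the standard MNL normalisation the Gumbel error of a component with scale $\mu$ has variance $\pi^2/(6\mu^2)$, so with mu_SDR fixed at 1 a value of mu_CBC around 0.85 can be read as a larger error variance in the CBC tasks. In the mixed logit / HB setting this concerns only the logit kernel, not the random-coefficient heterogeneity, and 0.85 is the rounded value quoted above:

```latex
\frac{\operatorname{Var}(\varepsilon^{\mathrm{CBC}})}{\operatorname{Var}(\varepsilon^{\mathrm{SDR}})}
= \frac{\pi^2 / (6\,\mu_{\mathrm{CBC}}^2)}{\pi^2 / (6\,\mu_{\mathrm{SDR}}^2)}
= \frac{\mu_{\mathrm{SDR}}^2}{\mu_{\mathrm{CBC}}^2}
= \frac{1}{0.85^2} \approx 1.38
```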

After reading Bradley and Daly (1997), "Estimation of Logit Choice Models Using Mixed Stated-Preference and Revealed-Preference Information", I made a small change to how the alternative-specific constant enters the utility functions.

Preference space:

```r
V[['alt1']] = b_asc_1_value + mu_SDR * (
  b_Att1Lvl2_value * Att1Lvl2.1 + b_Att1Lvl3_value * Att1Lvl3.1 +
  b_Att2Lvl2_value * Att2Lvl2.1 + b_Att2Lvl3_value * Att2Lvl3.1 + b_Att2Lvl4_value * Att2Lvl4.1 +
  b_Att3Lvl2_value * Att3Lvl2.1 + b_Att3Lvl3_value * Att3Lvl3.1 +
  b_Price_value * Price.1)

V[['alt2']] = b_asc_2_value + mu_SDR * (
  b_Att1Lvl2_value * Att1Lvl2.2 + b_Att1Lvl3_value * Att1Lvl3.2 +
  b_Att2Lvl2_value * Att2Lvl2.2 + b_Att2Lvl3_value * Att2Lvl3.2 + b_Att2Lvl4_value * Att2Lvl4.2 +
  b_Att3Lvl2_value * Att3Lvl2.2 + b_Att3Lvl3_value * Att3Lvl3.2 +
  b_Price_value * Price.2)

mnl_settings = list(
  alternatives = c(alt1=1, alt2=2),
  avail        = list(alt1=1, alt2=1),
  choiceVar    = Choice,
  V            = V,  # ASCs sit outside the mu_SDR bracket, so they are not scaled
  rows         = (ModelType=="SDR")
)
```

WTP space:

```r
# The ASC enters only outside the mu_SDR bracket, so it is not scaled
V[['alt1']] = b_Price_value * wtp_asc_1_value + b_Price_value * mu_SDR * (
  wtp_Att1Lvl2_value * Att1Lvl2.1 + wtp_Att1Lvl3_value * Att1Lvl3.1 +
  wtp_Att2Lvl2_value * Att2Lvl2.1 + wtp_Att2Lvl3_value * Att2Lvl3.1 + wtp_Att2Lvl4_value * Att2Lvl4.1 +
  wtp_Att3Lvl2_value * Att3Lvl2.1 + wtp_Att3Lvl3_value * Att3Lvl3.1 +
  Price.1)

V[['alt2']] = b_Price_value * wtp_asc_2_value + b_Price_value * mu_SDR * (
  wtp_Att1Lvl2_value * Att1Lvl2.2 + wtp_Att1Lvl3_value * Att1Lvl3.2 +
  wtp_Att2Lvl2_value * Att2Lvl2.2 + wtp_Att2Lvl3_value * Att2Lvl3.2 + wtp_Att2Lvl4_value * Att2Lvl4.2 +
  wtp_Att3Lvl2_value * Att3Lvl2.2 + wtp_Att3Lvl3_value * Att3Lvl3.2 +
  Price.2)
```
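As a sanity check on the two parameterisations, a base-R sketch with hypothetical values for a single alternative: with the constant entering only outside the scaled bracket, the WTP-space utility equals the preference-space utility when b_k = b_Price * wtp_k and b_asc = b_Price * wtp_asc. This holds for fixed parameter values; in HB the implied distributions of the random coefficients differ between the two spaces.

```r
# Hypothetical values; x holds the non-price attribute dummies of one alternative
b_Price <- -0.05
wtp     <- c(Att1Lvl2 = 8, Att1Lvl3 = 12, Att2Lvl2 = -4)
wtp_asc <- 10
mu_SDR  <- 1
x       <- c(Att1Lvl2 = 1, Att1Lvl3 = 0, Att2Lvl2 = 1)
price   <- 30

# WTP space: ASC outside the scaled bracket, price coefficient factored out
V_wtp <- b_Price * wtp_asc + b_Price * mu_SDR * (sum(wtp * x) + price)

# Preference space with the implied coefficients
b      <- b_Price * wtp
b_asc  <- b_Price * wtp_asc
V_pref <- b_asc + mu_SDR * (sum(b * x) + b_Price * price)

all.equal(V_wtp, V_pref)
```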

In this specification, only the attributes shared by both sub-models are scaled; the alternative-specific constant, which exists only in model 2, is not. Here are the results:

Preference space:

```
b_asc_2    0.0000      NA
mu_CBC     0.7782  0.0298
mu_SDR     1.0000      NA
```

WTP space:

```
wtp_asc_2  0.0000      NA
mu_CBC     0.7249  0.0325
mu_SDR     1.0000      NA
```

In both specifications, mu_CBC appears to be significantly different from 1. However, my model is not a simple MNL model as in Apollo example 22 or in the papers, but a mixed logit (MIXL) model estimated with Bayesian methods. Even when I set hIW = FALSE and gFULLCV = FALSE, mu_CBC increases only slightly.
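A rough check of "significantly different from 1", assuming the second column of the output above is the standard error (for HB output, more likely the posterior standard deviation) of mu_CBC. This is only an approximate Wald-type calculation; a credible interval computed from the posterior draws would be the more direct Bayesian summary.

```r
est <- c(pref_space = 0.7782, wtp_space = 0.7249)
se  <- c(pref_space = 0.0298, wtp_space = 0.0325)

# Distance from 1 in units of the reported second column
t_vs_1 <- (est - 1) / se
round(t_vs_1, 2)  # -7.44 (preference space), -8.46 (WTP space)

# Approximate 95% intervals; neither contains 1
cbind(lower = est - 1.96 * se, upper = est + 1.96 * se)
```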

Kind regards

Nico